Fitting AI models in your pocket with quantization - Stack Overflow

By A Mystery Man Writer

generative AI - Stack Overflow

Can you work on conversational AI at home? - Quora

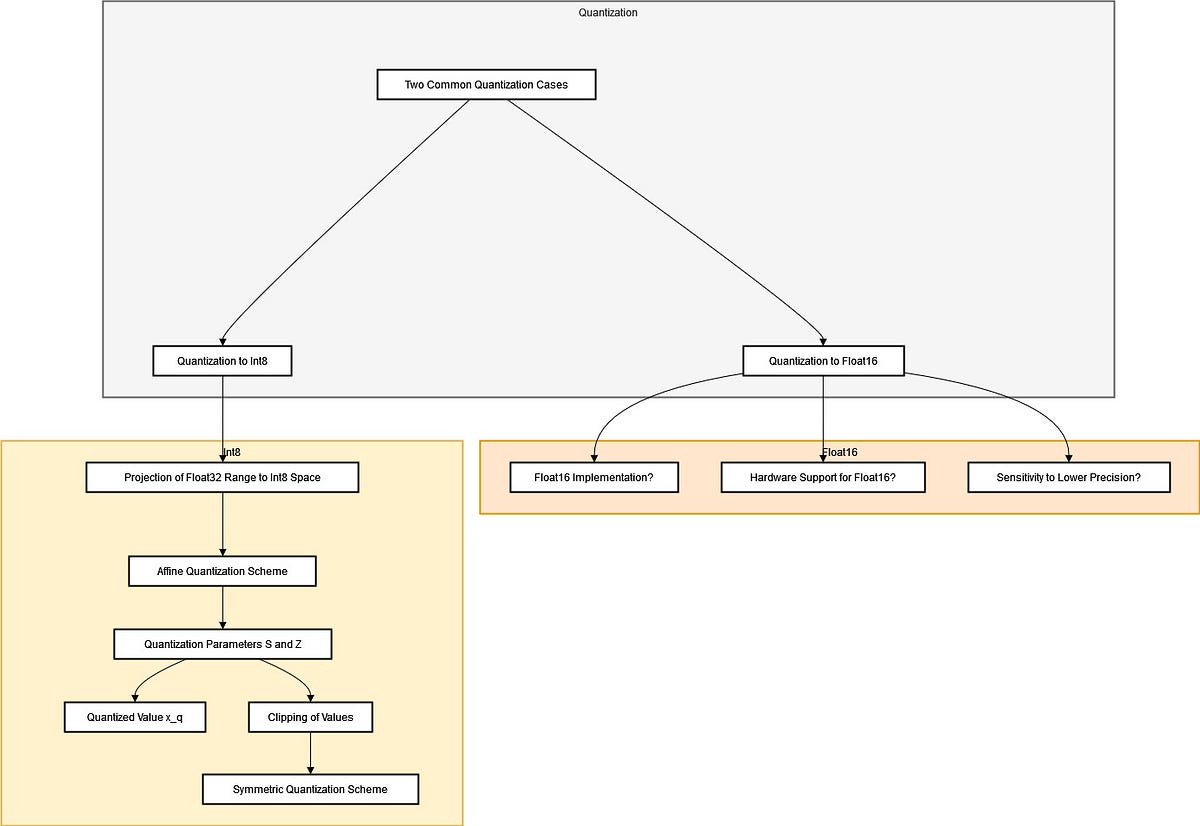

Understanding Quantization: Optimizing AI Models for Efficiency

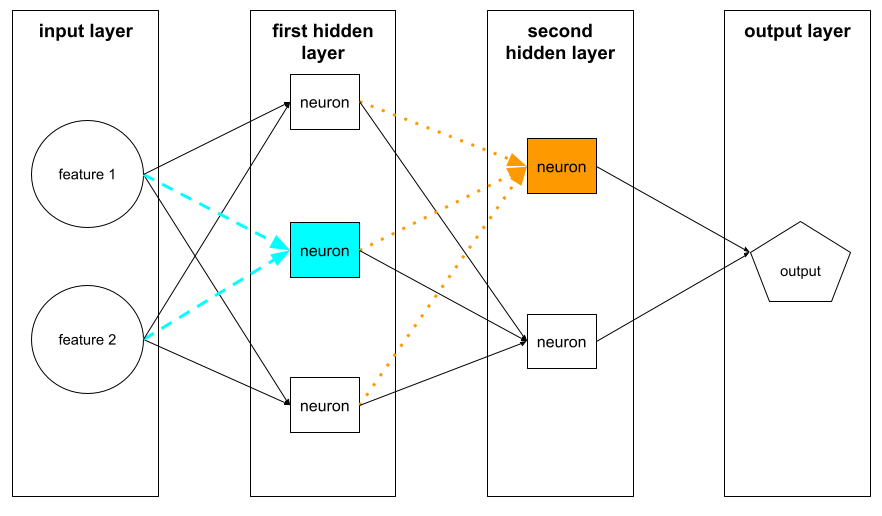

How is the deep learning model (its structure, weights, etc.) being stored in practice? - Quora

Ronan Higgins (@ronanhigg) / X

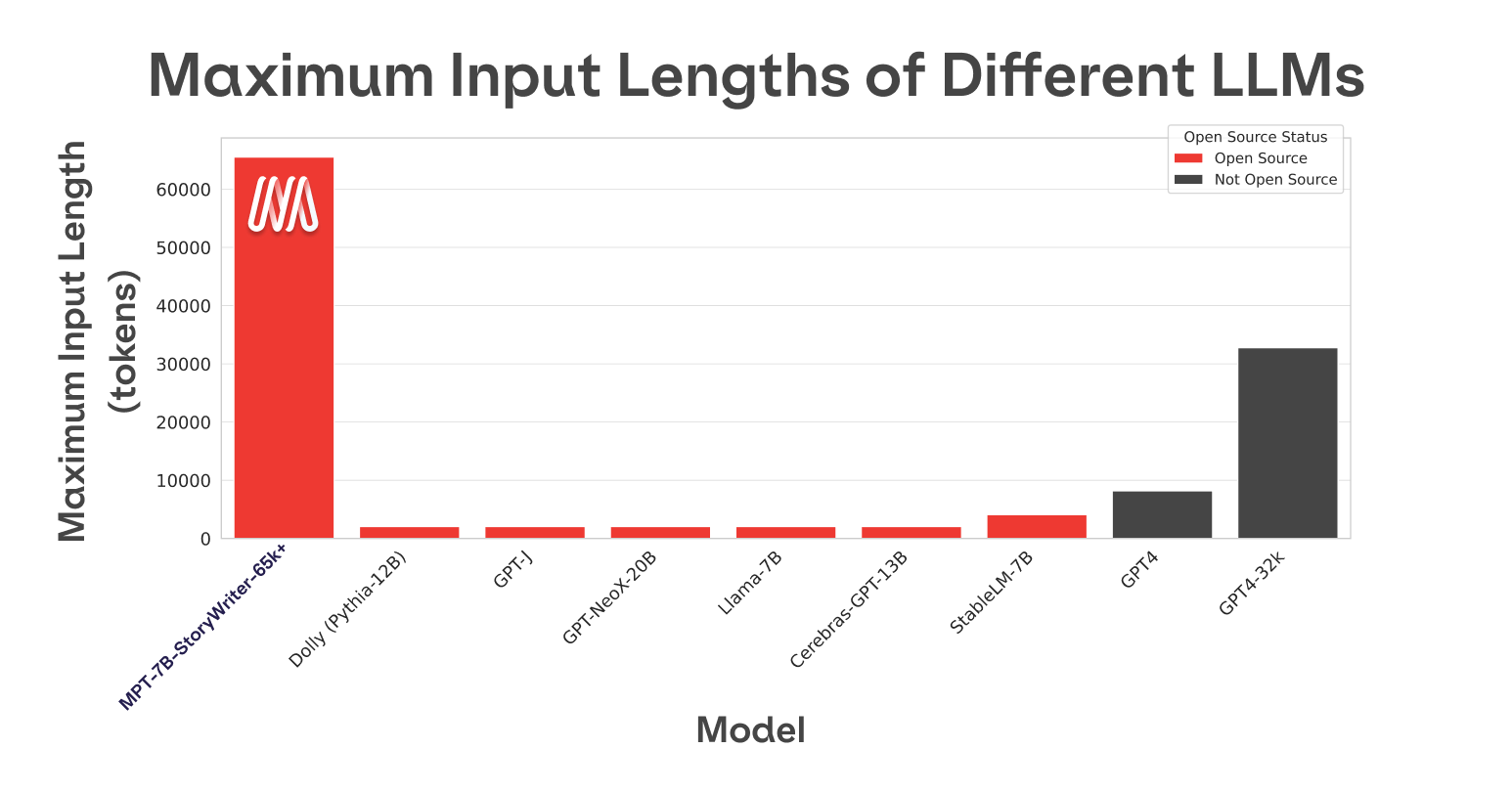

MPT-7B and The Beginning of Context=Infinity — with Jonathan

The New Era of Efficient LLM Deployment - Gradient Flow

TensorFlow vs. PyTorch: Which Framework Has the Best Ecosystem and Community?, by Jan Marcel Kezmann

I want to use Numpy to simulate the inference process of a quantized MobileNet V2 network, but the outcome is different with pytorch realized one - Stack Overflow

Filip Lange on LinkedIn: At lightspeed. Looking forward for the unlimited possibilities in…

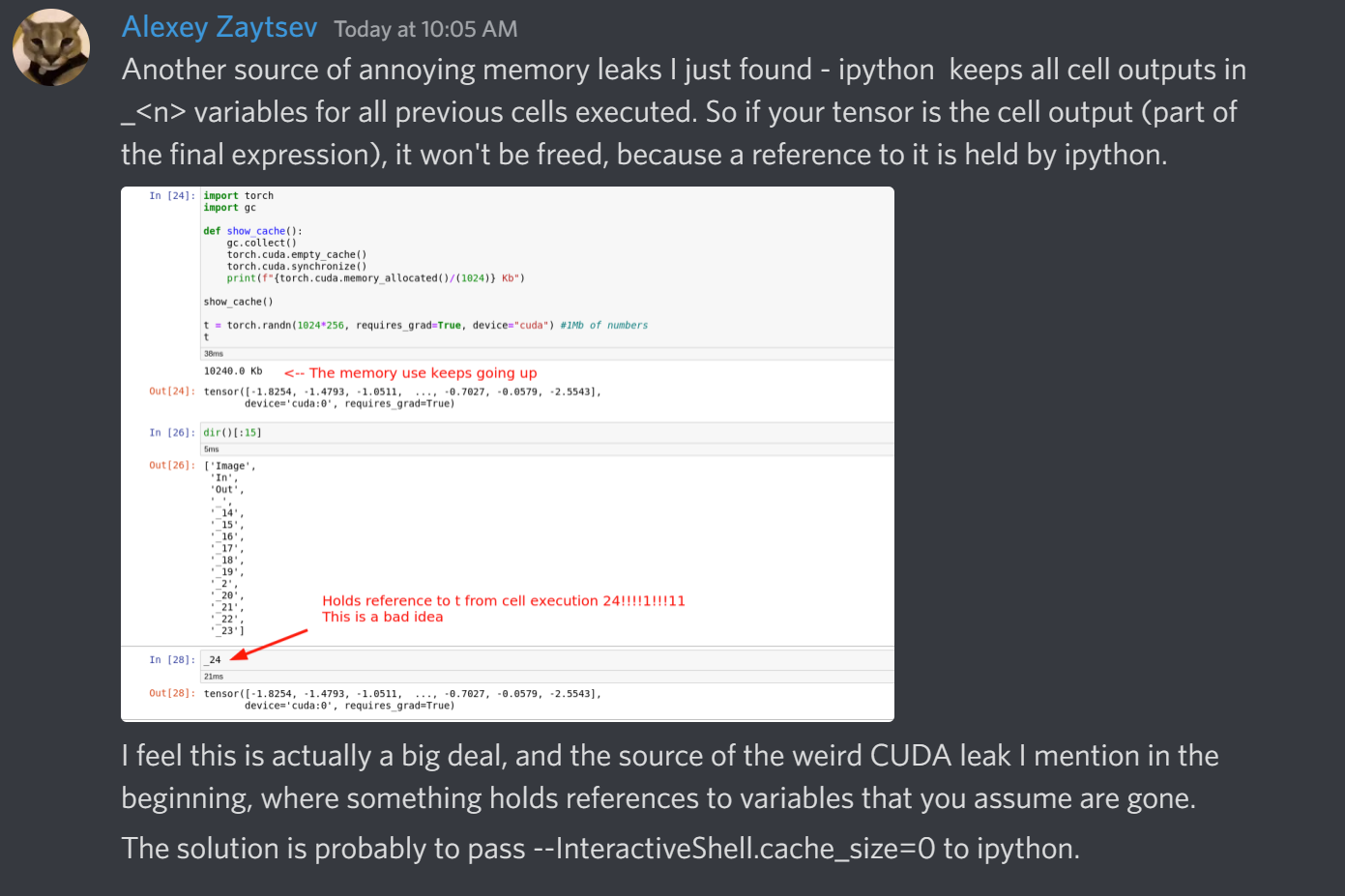

Solving the “RuntimeError: CUDA Out of memory” error, by Nitin Kishore